Luke Breuer recently commented:

You need to deal with the claim that atheism is more associated with ratio-analytic thinking styles.

Rationality is useless if it is not sound. This is what Martin Luther meant when he called reason a “whore”. Pick the wrong premises, and rationality is utterly screwed. Therefore, merely that someone is “rational” means absolutely nothing about whether that person is well-connected to reality.

Which is better:

(1) an argument which is sound but not valid

(2) an argument which is valid but not sound

? The answer, of course, is neither/unknown.

Now, if we go back to my original point, the idea of thinking styles IS TOTALLY important and was actually where I wanted the debate to go.

Alright; how about we contrast my ‘fact’ vs. ‘truth’ ideas with your:

Jonathan Pearce: I think the goal is to have a bottom up worldview, where you establish the building bricks and see what building arises. I think top down approaches are dangerous, and I think this is what many people, particularly theists, do. They start with a conclusion, and massage evidence to fit. I will happily throw out conclusions, as I have done many times in the past, if that is where the path leads.

? This bottom-up vs. top-down discussion does seem to be very important to thinking style, no? I would also point out Wikipedia’s top-down and bottom-up design for your consideration. I have quite a bit of knowledge about that, being a software architect. I could also throw in Richard Feynman’s “What I cannot create, I cannot understand.”—a favorite quotation of mine.

To which I responded:

The first part of your comment is really about justified truths. Is it better to have a conclusion which is correct but to which you poorly reason, or an incorrect conclusion to which you have argued very well (but perhaps an axiom is incorrect)?

This is why I ask: what does “better” mean? What is the goal?

If it is about finding the cure to a disease which is predicated upon correct truths, then having a poorly argued correct conclusion is better. If it is about fostering better critical thinking, etc., then perhaps the other option is.

Personally, I prefer a third option: well-argued conclusions that are both valid and sound.

The next part you already know. I prefer bottom up because this is about making sure everything from the bottom up is sound, leaving only the axiomatic foundational brick unable to be fully (deductively) rationalised.

Conclusions are hierarchical. In other words, objective morality, for example, depends upon several other levels, including whether objective ideas in general can and do exist, and whether anything exists outside of our own heads.

Just like Descartes, I reason from what I indubitably know to be true and build from there.

Anything else is more likely to open you up to cognitive biases.

This is fundamental, in many senses, to me. It is why I spend some time trying to establish the building bricks of philosophy, such as “what is an abstract idea?” So often we argue on the veneer, and so often this means that our conclusions or claims are propped up with little more than biases and bluster.

For me, the bottom up approach is by far the more justifiable one.

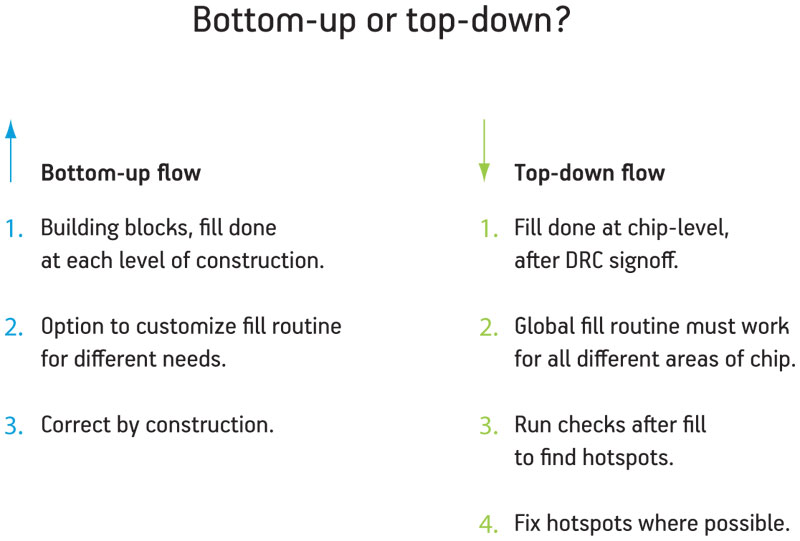

If you look at this image about chip design (or something, it doesn’t really matter), you will get my point:

Here you can see that the left-hand approach has a conclusion which is correct if the whole thing is built correctly. The importance is in the construction, which should ensure truth if built with the correct bricks. The right-hand option smacks of the ad hoc. If it doesn’t seem to work (that is, to be true), then you just mess around with fixing things at the last stages, working under the assumption, and nothing more than an assumption, that the initial building blocks, desires and plans are correct.

At the end of the day, looking down on things is pretty arrogant, and assumes that you know best.