You have to hand it to many science fiction creators for their predictions. Jules Verne foresaw rocket ships, solar sails, and trips to the moon; Aldous Huxley predicted genetic engineering and in vitro fertilization; Star Trek predicted cell phones, laptops, and even iPads. But almost everyone got the basic architecture of “future” information systems wrong, and many kept getting it wrong long after those systems actually came into existence.

The plot of the original Star Wars films got it so wrong as to make little sense at all: courier droids and smugglers are required just to send information. Apparently wide-scale communications are not readily available even in a civilization with droids, faster-than-light spacecraft and cities the size of planets. Both the original Star Trek and The Next Generation jarringly feature mainframe-type computer systems. Kirk or Picard sometimes has to go to a special terminal or room to access a computing function or database, because no Enterprise has Wi-Fi or Wikipedia.

Distributed, ubiquitous access to an enormous portion of human knowledge was not predicted by the writers. (Granted, mainframe-plus-thin-client systems still exist and are sometimes used, but their use is very limited, and they are now dwarfed by distributed systems in power, utility and ubiquity.) Even once it became obvious that connectivity would proliferate across the globe and penetrate dozens of tools and products that are not computers per se, sci-fi got it wrong in the opposite direction. Films like Hackers, The Net, Terminator (1-3), Die Hard 4, and Swordfish feature plots and plot elements in which any digital device can apparently access any other digital device in existence.

While a boon to hack writers the world over, this idea is just as wrong. Modern information systems tend to connect only the entities needed to fulfill their function, and only in circumscribed ways. My microwave oven has a small computer that doesn’t communicate with any other digital device. My smartphone can use GPS signals, but only as a passive receiver; it can’t send any signal to the GPS satellites, let alone control them in any imaginable fashion.

In short, modern information systems offer ubiquitous access to remote systems in principle, but in practice they are restricted to communicating only with the systems that serve a particular function, and only in the mode conducive to that function. Until very recently (and oddly, often even after) sci-fi creators didn’t guess at this arrangement. But they might have, if they had known about the computational theory of mind and the modular organization of the mind.
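To make that arrangement concrete, here is a toy sketch in Python. It is only an illustration under stated assumptions: the device names, the link table, and the `can_communicate` helper are all hypothetical, not a description of how any real network is configured. The point is simply that a link exists only where a function needs it, and only in the direction that function requires.

```python
# Toy model of "function-limited" connectivity.
# Every name below (devices, modes, the table itself) is a made-up
# assumption for illustration, not a real protocol or API.

# Map each (source, target) pair to the modes that link permits.
ALLOWED_LINKS = {
    ("phone", "gps_satellite"): {"receive"},        # passive receiver only
    ("phone", "cell_tower"): {"send", "receive"},   # two-way link
    # "microwave" has no entries at all: it talks to nothing.
}


def can_communicate(source: str, target: str, mode: str) -> bool:
    """Return True only if the link exists and permits the given mode."""
    return mode in ALLOWED_LINKS.get((source, target), set())


if __name__ == "__main__":
    print(can_communicate("phone", "gps_satellite", "receive"))
    print(can_communicate("phone", "gps_satellite", "send"))
    print(can_communicate("microwave", "phone", "send"))
```

In this sketch the default is no connection at all; the movie version of hacking would amount to a table in which every pair of devices allows every mode.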

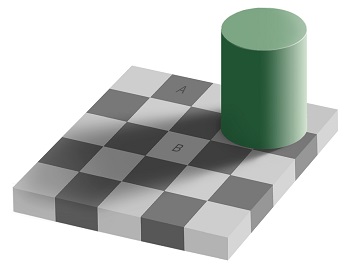

The comparison between technological and neuronal information systems should never be construed too literally, but there are striking architectural similarities. Functionally coherent bits of the mind “know” things produced by other bits. They may have uni- or bi-directional communication. Your visual cortex “tells” you that squares A and B are different colors, but your knowledge that they are the same color can’t be “told” to your visual cortex. You continue to see them as different. That particular type of communication is uni-directional.

You can’t directly access information about your heart rate, blood pressure, blood glucose, temperature and other data, even though parts of your brain monitor them every second. Connections are plentiful between “systems” when function depends on it: you can construct imaginative counterfactuals using information from your senses, short- and long-term memory, auditory and language processors, and emotional state simultaneously. The newly conjectured ideas can then be written to memory and affect many systems in turn.

Artificial and neuronal information systems have many of the same features because the features are so powerful: cheap, high-bandwidth connections and well “designed” modular systems built around a small number of functions, frequently hierarchical.

The “all powerful” mainframe and hyper-connectionist computing models have also been postulated as metaphors for how the mind is organized. The mainframe is essentially the religious soul concept (or, put more secularly, the homunculus). The hyper-connectionist model is akin to old-school behaviorism and is more generally called (naive) connectionism. These models are as obsolete in psychology as they are in technology.

Further reading

Jerry Fodor and the modularity of mind, wiki

Why Everyone (Else) Is a Hypocrite: Evolution and the Modular Mind by Robert Kurzban

Jules Verne, wiki